Ilya has officially announced his departure, and Jan Leike, co-lead of the Superalignment team, has resigned as well. OpenAI is splintering again.

After almost a decade, I have made the decision to leave OpenAI. The company’s trajectory has been nothing short of miraculous, and I’m confident that OpenAI will build AGI that is both safe and beneficial under the leadership of @sama,@gdb,@miramurati and now, under the excellent research leadership of @merettm. It was an honor and a privilege to have worked together, and I will miss everyone dearly. So long, and thanks for everything. I am excited for what comes next — a project that is very personally meaningful to me about which I will share details in due time.

Ilya Sutskever also shared a photo with Sam Altman, Greg Brockman, and Mira Murati.

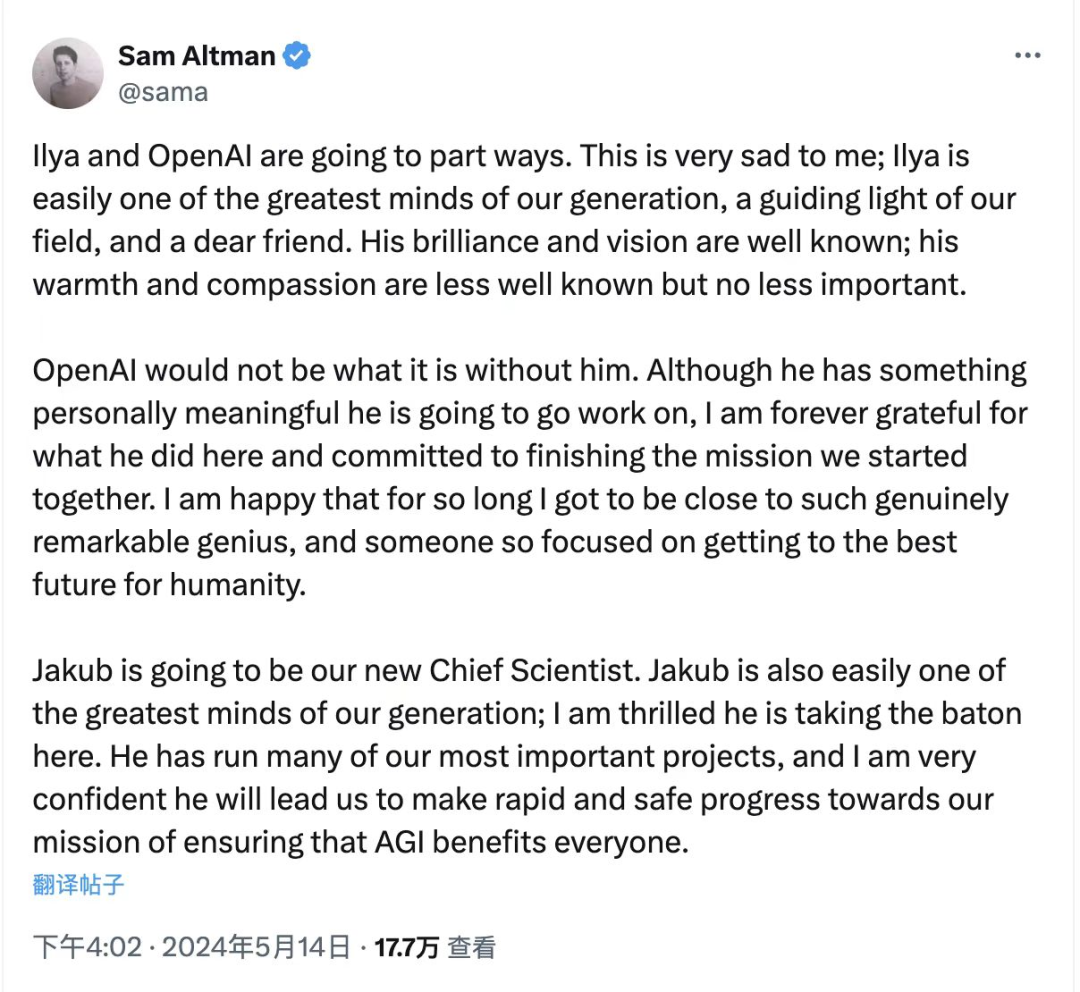

OpenAI CEO Sam Altman wrote on Twitter that it is very sad that Ilya and OpenAI are parting ways.

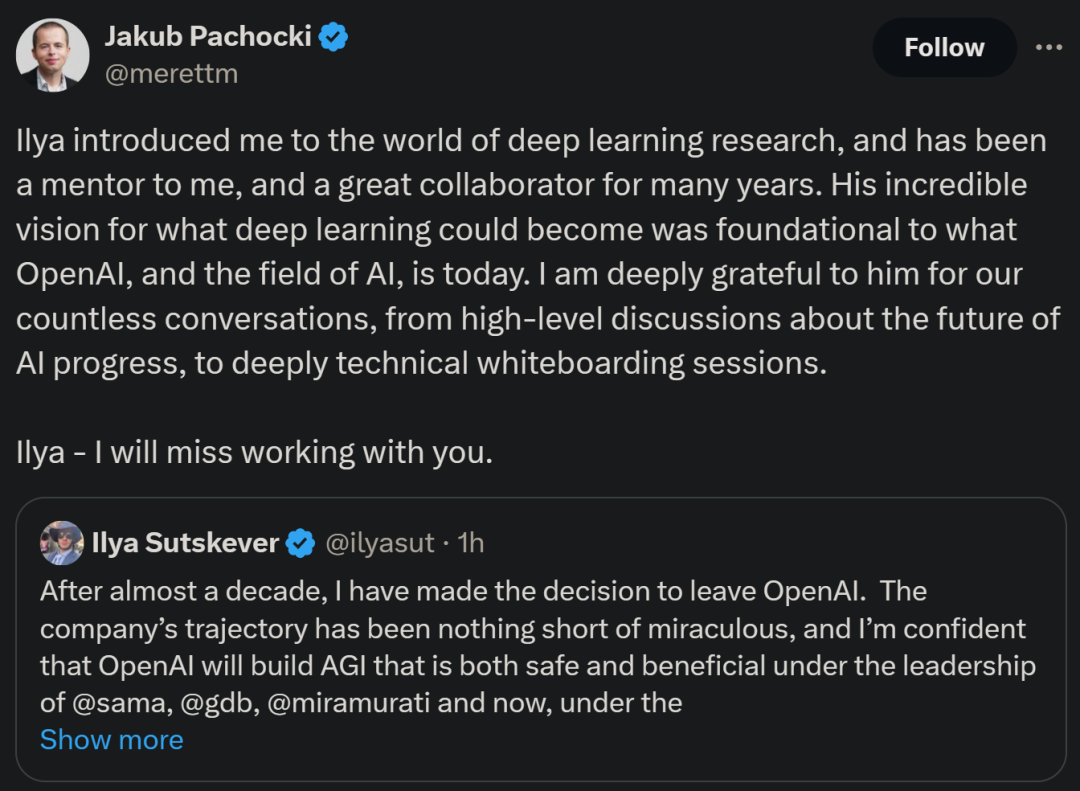

Jakub Pachocki, who is about to become the next Chief Scientist of OpenAI, also expressed his gratitude to his predecessor, Ilya.

According to OpenAI’s official website, the new Chief Scientist, Jakub Pachocki, holds a PhD in theoretical computer science from Carnegie Mellon University and has led many of OpenAI’s most transformative research efforts since 2017. He previously served as OpenAI’s Research Director, leading the development of GPT-4 and OpenAI Five as well as fundamental research on large-scale RL and deep learning optimization.

OpenAI said he “has been instrumental in refocusing the company’s vision towards scaling deep learning systems.”

Thus ends the eight-year story between Ilya Sutskever and OpenAI.

Jan Leike, co-lead of the Superalignment team, announced his departure at the same time as Ilya.

The Superalignment team was established only in July 2023, with the goal of “resolving this (alignment) problem by 2027 with the massive computational support of OpenAI.”

Now both are leaving at the same time, with that work unfinished. Will superalignment feature in Ilya’s next plans?

A Turbulent Childhood, Undergraduate Years under Hinton

In fact, even in a world without OpenAI, Ilya Sutskever would still be immortalized in the annals of artificial intelligence.

Ilya Sutskever is Israeli-Canadian. Born in the former Soviet Union, he immigrated to Jerusalem with his family at the age of five (which is why he speaks Russian, Hebrew, and English) and moved to Canada in 2002.

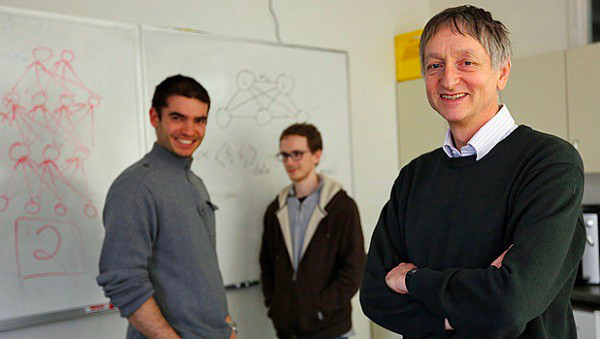

During his undergraduate studies at the University of Toronto, Ilya Sutskever began working with Geoffrey Hinton on a project called “Improved Stochastic Neighborhood Embedding Algorithm,” and he later formally joined Hinton’s team as a Ph.D. student.

We are all familiar with what happened later: In 2012, Hinton built a neural network named AlexNet with Ilya Sutskever and another graduate student, Alex Krizhevsky. Its ability to recognize objects in photos far exceeded that of other systems at the time.

“Ilya has always been very interested in language,” said Jeff Dean, now Google’s Chief Scientist. “He has a strong intuition about the direction things are going.”

At Google, which he joined in 2013 when it acquired Hinton’s startup DNNresearch, Ilya Sutskever demonstrated how deep learning’s pattern recognition abilities could be applied to sequences of data, including words and sentences. He collaborated with Oriol Vinyals and Quoc Le to create the Sequence to Sequence (Seq2Seq) learning algorithm and contributed to TensorFlow. He was also one of the many authors of the AlphaGo paper.

Joining OpenAI, leading the GPT series research

Perhaps his strong interest in language was what propelled Ilya Sutskever to join OpenAI.

In July 2015, Ilya Sutskever attended a dinner hosted by Y Combinator president Sam Altman at a restaurant on Sand Hill Road, where he met Elon Musk and Greg Brockman.

That dinner gave birth to OpenAI. Everyone present agreed on one thing: it needed to be a non-profit organization, with no competitive incentives to dilute its mission, and it required the world’s best AI researchers.

At the end of 2015, Ilya Sutskever began leading research and operations at OpenAI as “Research Director.” The organization attracted several world-renowned AI researchers, including Ian Goodfellow, the “father of GANs,” UC Berkeley’s Pieter Abbeel, and Andrej Karpathy.

The new company came with a billion dollars in pledged funding from Sam Altman, Elon Musk, Peter Thiel, Microsoft, Y Combinator, and others. From the outset, they set their sights on AGI, even though the idea was not widely accepted at the time.

However, OpenAI struggled in its early days. Ilya Sutskever said, “When we started OpenAI, there was a time when I was unsure how we could continue to make progress. But I had one very clear belief: not to bet against deep learning. Somehow, every time we encountered an obstacle, researchers would find a way around it within six months or a year.”

OpenAI’s first large language model, GPT, arrived in 2018. From GPT-2 to GPT-3, the models grew steadily more capable, validating this approach. With each release, OpenAI kept pushing the boundaries of people’s imaginations.

But Ilya Sutskever revealed that the internal expectations were low when ChatGPT, which really put OpenAI on the map, was launched: “When you ask it a factual question, it will give you a wrong answer. I thought it would be uninteresting, and people would say, ‘Why did you make this? This is so boring!'”

Packaging the GPT model in an easy-to-use interface and making it free allowed millions of people to see for the first time what OpenAI was building. By then, the large language model behind ChatGPT had already existed for several months.

The success of ChatGPT put the founding team in an unprecedented spotlight.

In 2023, OpenAI CEO Sam Altman spent most of the summer on a weeks-long outreach trip — talking with politicians and giving speeches in packed auditoriums around the world.

Ilya Sutskever, the company’s inaugural Chief Scientist, kept a low profile by contrast. Unlike the other founding members, who are public figures, he rarely granted interviews and chose to focus on GPT-4.

He showed no interest in discussing his personal life: “My life is simple. Go to work, then go home. I don’t do anything else. One can participate in many social activities, join many events, but I don’t.”

What did ChatGPT’s success bring him?

In 2023, Hinton publicly expressed fear about the technology he helped invent: “I’ve never seen a case where something of a much higher level of intelligence is controlled by something of a much lower level of intelligence.”

As Hinton’s student, Ilya Sutskever did not comment on this statement, but his concerns about the negative impacts of superintelligence show that the two are on the same wavelength.

With GPT-4 and the series of more powerful large language models that followed, a group within OpenAI, represented by Ilya Sutskever, grew increasingly worried about the controllability of AI.

Before the boardroom turmoil at OpenAI, Ilya gave an interview to a journalist from MIT Technology Review.

He said that his focus had moved away from building the next generation of GPT or the image generator DALL-E and toward researching how to prevent an artificial superintelligence (a hypothetical future technology that he is convinced is coming) from going rogue.

“Sutskever also told me a lot of other things, including that he thinks ChatGPT might be conscious (if you squint),” the reporter wrote.

Ilya Sutskever believes that the world needs to wake up to the true power of the technology that OpenAI and other companies are striving to create. He also believes that one day some humans will choose to merge with machines.

Once the level of artificial intelligence surpasses humans, how should humans supervise an AI system that is much smarter than themselves?

In July 2023, OpenAI established the Superalignment team with the goal of solving the alignment problem for superintelligent AI within four years. Ilya Sutskever was one of the leaders of this project, and OpenAI stated that it would dedicate 20% of the compute it had secured to date to this research.

In an interview, Ilya Sutskever boldly predicted that if a model can predict the next word well, it means that it can understand the profound reality that led to the generation of that word. This means that if AI continues to develop along its existing trajectory, perhaps in the not so distant future, an AI system that surpasses humans will be born. However, what is more concerning is that “super artificial intelligence” might bring some unexpected negative consequences. That’s the significance of “alignment.”

The team’s first result was published in December 2023: using a GPT-2-level small model to supervise a GPT-4-level large model can recover performance close to GPT-3.5 level, opening a new research direction for empirically aligning superhuman models.

At the same time, OpenAI announced a partnership with Eric Schmidt to launch a $10 million grant program supporting technical research on the alignment and safety of superhuman AI systems.

With the departure of its two leaders, the future of the Superalignment team has become uncertain.

Break with the OpenAI founding team

Judging by today’s outcome, Ilya Sutskever seems to have had irreconcilable differences with the faction represented by Sam Altman.

Greg Brockman, OpenAI co-founder and board chairman at the time, described the sequence of events as follows:

Sam received a text message from Chief Scientist Ilya Sutskever asking to talk at noon on Friday. Sam joined the meeting via Google Meet, and all members of the board, except for Greg, attended. Ilya Sutskever told Sam that he was being fired and that the news would be released shortly.

Greg received a text message from Ilya Sutskever at 12:19 pm requesting a phone call as soon as possible. At 12:23, Ilya Sutskever sent a Google Meet link. Greg was informed that he would be removed from the board (but that he was essential to the company and would keep his position) and that Sam had been fired. At about the same time, OpenAI issued an announcement.

OpenAI’s announcement said that Altman had not been candid enough with the board. One interpretation that circulated afterward: OpenAI may have achieved AGI internally without promptly informing more people, and Ilya and others hit the emergency stop button to keep the technology from being widely deployed before a safety assessment.

According to The Information, at a company-wide meeting held that same day, Ilya Sutskever responded to employees who were calling it a “coup.” He said, “You can say it’s a coup, but I think it’s just the board fulfilling its duty.”

Of course, all this is speculation.

By the end of November 2023, Sam Altman had officially returned to OpenAI. With the dust settled, Ilya Sutskever did not immediately leave OpenAI, but he was not seen again in OpenAI’s San Francisco office.

Sam Altman expressed gratitude to Ilya Sutskever and said he hoped to keep working with him: “I love and respect Ilya, I think he’s a guiding light of the field and a gem of a human being. I harbor zero ill will towards him.”

Dalton Caldwell, the Managing Director of Investments at Y Combinator, once recalled, “I remember Sam Altman saying that Ilya was one of the most respected researchers in the world and that he thought Ilya could attract a lot of top AI talent. He even mentioned that world-renowned AI scholar Yoshua Bengio believed there could be no better choice for Chief Scientist of OpenAI than Ilya.”

The choice of Jakub Pachocki as successor was presumably also the result of careful consideration by the OpenAI board. Jakub joined OpenAI in 2017, which was also his first job after leaving school.

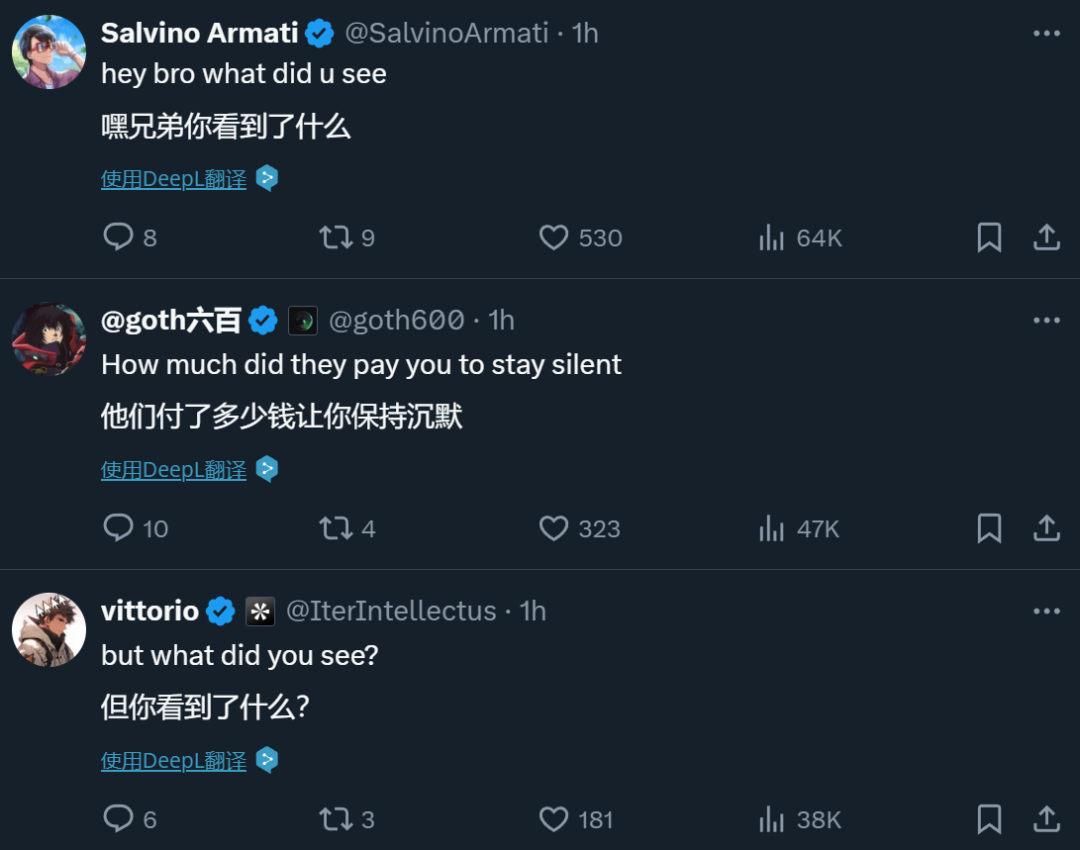

What did Ilya see?

Rumors about Ilya leaving OpenAI have been circulating for a long time. When the boardroom turmoil broke out at OpenAI and Sam Altman was ousted, it was rumored that Ilya had “seen something.” This “something” was powerful enough to make him worry about the future of AI and rethink its development. But what he saw remains unknown.

In fact, Ilya’s concerns did not begin with ChatGPT. In a video recorded between 2016 and 2019, he suggested that once AGI is realized, AI will not necessarily hate humans but may treat them the way humans treat animals. People do not set out to harm animals, but when they want to build an intercity highway they do not consult the animals; they simply go ahead. Faced with a similar situation, AI might naturally make a similar choice. This is Ilya’s philosophy on AI.

This explains why Ilya is always apprehensive about the advancement of AI and why he focuses on the alignment of AI (making AI align with human values).

What’s next for Ilya?

As a fellow OpenAI founder who also left midway, Musk has extended an olive branch to Ilya Sutskever, suggesting he join Tesla or xAI. Could this be his next move?

Because non-compete agreements are generally unenforceable in California, Ilya Sutskever could immediately work for another company or start his own.

Another prominent OpenAI employee, Andrej Karpathy, left OpenAI to join Tesla, later returned to OpenAI, and recently announced his departure once again. During the boardroom turmoil at the end of 2023, Karpathy was on vacation and entirely an “outsider,” yet he completed his resignation earlier than Ilya.

What’s the truth? We don’t know if there will ever be a chance to find out.

Many of Ilya Sutskever’s statements might sound a bit wild, but they no longer seem as “crazy” as they might have a year or two ago. As he said, ChatGPT has rewritten many people’s expectations for the future, turning “never going to happen” into “will happen faster than you think.”