Google fights back: Project Astra confronts GPT-4o, Veo battles Sora, and the new version of Gemini transforms search.

This is Google’s response to OpenAI.

General-purpose AI that people can actually use every day: these days, it would be embarrassing to hold a launch event without it.

In the early hours of May 15, Google I/O, the annual developer conference often called the “Spring Festival Gala of the tech world,” officially began. How many times was artificial intelligence mentioned in the 110-minute keynote? Google counted:

Yes, AI is being talked about every minute.

Competition in generative AI has recently reached a new peak, and this year’s I/O naturally revolved around artificial intelligence.

“A year ago on this stage, we first shared our plans for the native multimodal large model, Gemini. It marked the new generation of I/O,” Google CEO Sundar Pichai said. “Today, we hope everyone can benefit from Gemini’s technology. These groundbreaking features will penetrate into search, images, productivity tools, Android systems, and many other aspects.”

Just 24 hours earlier, OpenAI preemptively released GPT-4o, shocking the world with real-time voice, video, and text interaction. Today, Google’s Project Astra and Veo take direct aim at OpenAI’s leading GPT-4o and Sora.

We are witnessing the highest-end commercial war, conducted in the most down-to-earth manner.

New Version of Gemini Revolutionizes the Google Ecosystem

At the I/O conference, Google demonstrated the search capabilities enhanced by the latest version of Gemini.

25 years ago, Google drove the first wave of the information age with its search engine. Now, as generative AI evolves, search can do a better job of answering your questions by making fuller use of context, location awareness, and real-time information.

With a customized version of the latest Gemini model behind it, the search engine can take on anything you can think of or need to get done, from research to planning to brainstorming, and Google will take care of the rest.

Sometimes you want an answer quickly but don’t have time to piece all the information together. In that case, the search engine does the work for you through AI summarization, automatically drawing on a large number of websites to answer a complex question.

With the multi-step reasoning of the custom Gemini model, AI summarization will help with increasingly complex questions. You no longer need to break a question down into multiple searches. Instead, you can ask your most complex question once, along with all the nuances and caveats you have in mind.

In addition to finding the correct answer or information to complex questions, search engines can also work with you to make plans step by step.

At the I/O conference, Google strongly emphasized the multimodal and long-context capabilities of its large models. These advances have made productivity tools like Google Workspace even more intelligent.

For example, we can now ask Gemini to summarize all the recent emails sent by the school. It will recognize the related emails in the background, and even analyze attachments like PDFs. Then, you can obtain a summary of the main points and action items therein.

If you’re traveling and can’t attend an hour-long project meeting that was held on Google Meet, you can ask Gemini to walk you through the key points. If a group is looking for volunteers and you’re available that day, Gemini can help you write an email to sign up.

Going further, Google sees more opportunities in large-model agents, which it regards as intelligent systems with reasoning, planning, and memory capabilities. Agents can “think” several steps ahead and work across software and systems, helping you complete tasks more conveniently. This approach is already visible in products like search, where everyone can directly see the improvement in AI capabilities.

At least in terms of integrated applications, Google is ahead of OpenAI.

Major update to the Gemini Family: Project Astra launched

Google has a natural advantage in terms of its ecosystem, but the foundation of large models is crucial. For this, Google has integrated the forces of its own team and DeepMind. Today, Hassabis also made his first appearance at the I/O conference, personally introducing the mysterious new model.

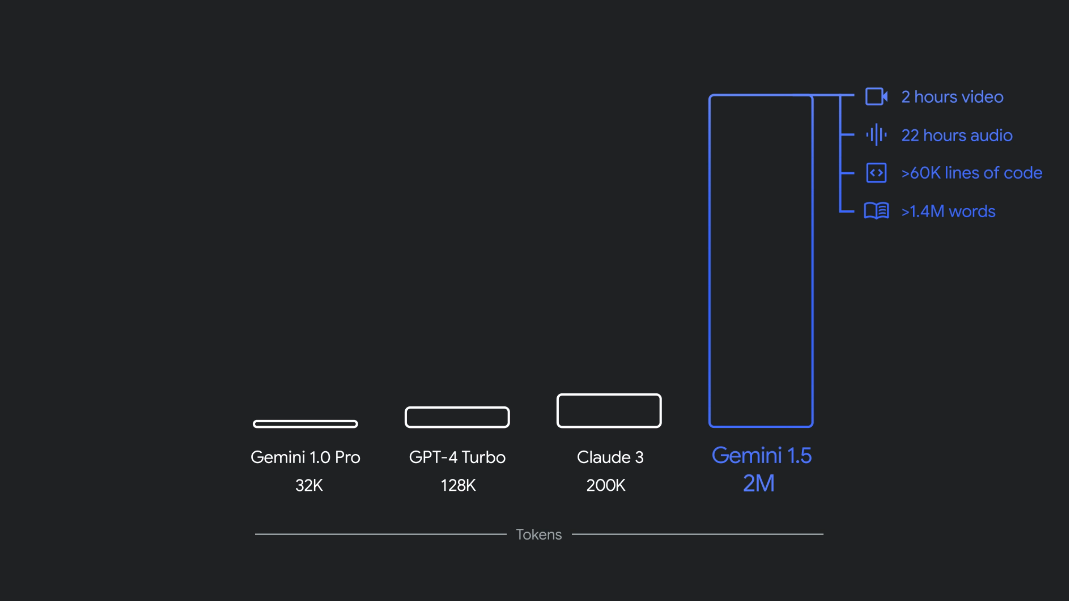

In December last year, Google launched its first native multimodal model, Gemini 1.0, available in three sizes: Ultra, Pro, and Nano. Just a few months later, Google released a new version, 1.5 Pro, with improved performance and a context window that broke through 1 million tokens.

Now, Google has announced a series of updates to the Gemini family, including the new Gemini 1.5 Flash, a lightweight model built for speed and efficiency, and Project Astra, Google’s vision for the future of AI assistants.

Currently, both 1.5 Pro and 1.5 Flash are available for public preview and offer a 1 million token context window in Google AI Studio and Vertex AI. Now, 1.5 Pro also provides a 2 million token context window for developers using the API and Google Cloud customers via a waiting list.

Additionally, Gemini Nano has been expanded from text-only input to also accept images. Later this year, starting with Pixel, Google will launch multimodal Gemini Nano. This means that phones will be able to process not only text input but also richer contextual information, such as visuals, sound, and spoken language.

The Gemini family welcomes a new member: Gemini 1.5 Flash

The new 1.5 Flash has been optimized for speed and efficiency.

1.5 Flash is the latest member of the Gemini model series and the fastest Gemini model available in the API. It has been optimized for high-volume, high-frequency tasks at scale, is more cost-effective to serve, and features a breakthrough long context window (1 million tokens).

Gemini 1.5 Flash has strong multimodal reasoning capabilities and has a breakthrough long context window.

1.5 Flash excels at summarization, chat applications, image and video captioning, and data extraction from long documents and tables. This is because it was trained by 1.5 Pro through a process called “distillation,” in which the most essential knowledge and skills of a larger model are transferred to a smaller, more efficient one.
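To make the idea of distillation more concrete, here is a minimal sketch of the textbook knowledge-distillation objective, in which a small “student” model is penalized for diverging from the softened output distribution of a larger “teacher.” The article does not describe Google’s actual training recipe, so the function names, the temperature value, and the toy logits below are all illustrative assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) computed on temperature-softened distributions.

    This is the generic formulation from the distillation literature,
    not a description of how any Gemini model was trained.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical logits over three candidate tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different token

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A student that agrees with the teacher gets a loss near zero, while one that prefers a different token is penalized more heavily; minimizing this quantity during training is what pulls the smaller model toward the larger model’s behavior.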

Gemini 1.5 Flash performance. Source https://deepmind.google/technologies/gemini/#introduction

Improved Gemini 1.5 Pro

The context window has expanded to 2 million tokens

Google mentioned that today there are more than 1.5 million developers using the Gemini model, and over 2 billion product users have employed Gemini.

In the past few months, Google not only expanded the context window of Gemini 1.5 Pro to 2 million tokens, but also enhanced its code generation, logical reasoning and planning, multi-turn dialogue as well as audio and image understanding abilities through improvements in data and algorithms.

1.5 Pro can now follow increasingly complex and detailed instructions, including those that specify product-level behavior related to roles, format and style. Moreover, Google has given users the ability to guide model behavior through system instruction settings.

Google has now added audio understanding to the Gemini API and Google AI Studio, so 1.5 Pro can reason over both the images and the audio of videos uploaded to Google AI Studio. Google has also incorporated 1.5 Pro into its own products, including Gemini Advanced and Workspace applications.

The price for Gemini 1.5 Pro is $3.5 per one million tokens.
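As a rough illustration of what that rate means in practice, the sketch below converts a token count into a dollar estimate. It assumes a single flat rate of $3.5 per million tokens, which is the only figure the article gives; real pricing may distinguish input from output tokens or vary with prompt length, and the function name is hypothetical.

```python
PRICE_PER_MILLION_TOKENS_USD = 3.5  # the figure quoted in the article

def estimate_cost_usd(token_count, price_per_million=PRICE_PER_MILLION_TOKENS_USD):
    """Estimate cost under an assumed flat per-token rate."""
    if token_count < 0:
        raise ValueError("token_count must be non-negative")
    return token_count / 1_000_000 * price_per_million

# A short prompt, a full 1M-token window, and a full 2M-token window.
for tokens in (10_000, 1_000_000, 2_000_000):
    print(f"{tokens:>9,} tokens -> ${estimate_cost_usd(tokens):.3f}")
```

Under this assumption, filling the entire 2 million token window once would cost about $7.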

Actually, one of the most exciting transformations of Gemini is Google Search.

Over the past year, as part of the Search Generative Experience, Google Search has answered billions of queries. People can now search in entirely new ways: asking new types of questions, making longer and more complex queries, even searching with photos, and getting the best information the web has to offer.

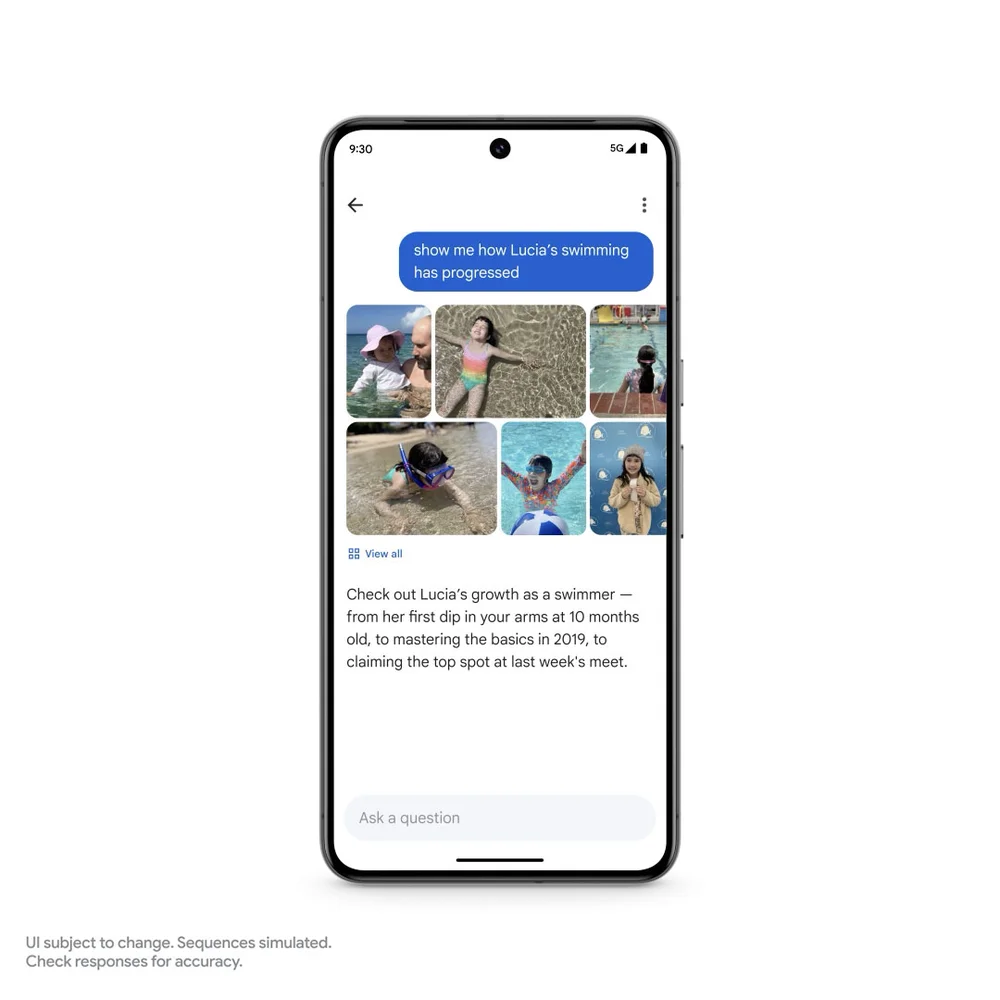

Google is about to launch the Ask Photos feature. Google Photos itself launched about nine years ago, and users now upload more than 6 billion photos and videos every day. People love searching their photos to revisit moments in their lives, and Gemini makes all of this easier.

Suppose you’re paying at a parking lot but can’t remember your license plate number. Before, you would search your photos by keyword and then scroll through years of pictures looking for the plate. Now, you simply ask Photos.

Or suppose you are reminiscing about your daughter Lucía’s early years. You can now ask Photos: When did Lucía learn to swim? You can also follow up with something more complex: Tell me about Lucía’s swimming progress.

Here, Gemini goes beyond simple search. It recognizes different settings, including pools, the sea, and other scenes, and Photos pulls all the information together for the user’s convenience. Google will launch the Ask Photos feature this summer, with more features to follow.

New Generation Open Source Large Model Gemma 2

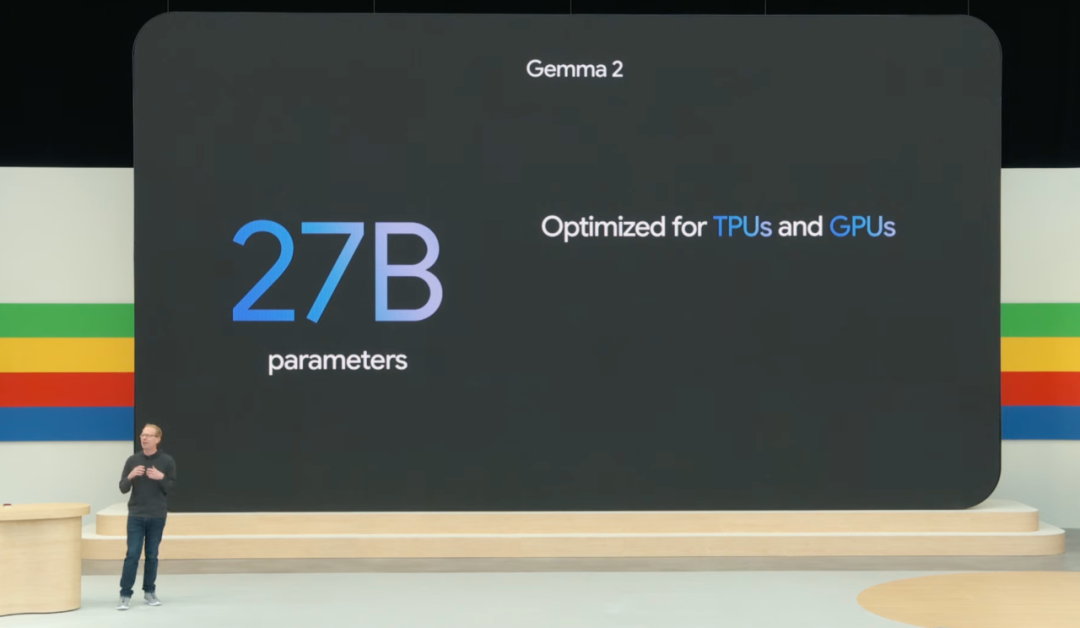

Today, Google also released a series of updates to the open source large model Gemma – Gemma 2 is here.

According to Google, Gemma 2 uses a new architecture designed for breakthrough performance and efficiency, and the newly open-sourced model has 27B parameters.

In addition, the Gemma family is growing with the addition of PaliGemma, Google’s first vision-language model, which was inspired by PaLI-3.

Universal AI Agent Project Astra

AI agents have always been a focus of Google DeepMind’s research.

Yesterday, we witnessed the powerful real-time voice and video interaction capabilities of OpenAI’s GPT-4o.

Today, DeepMind unveiled Project Astra, a universal AI agent built for visual and voice interaction, which represents Google DeepMind’s vision for future AI assistants.

Google says that to be truly useful, an agent needs to understand and respond to the complex, dynamic real world the way humans do, and to absorb and remember what it sees and hears so that it can understand context and take action. The agent also needs to be proactive, teachable, and personal, so that users can converse with it naturally, without lag or delay.

In the past few years, Google has worked to improve how its models perceive, reason, and converse, so that the speed and quality of interaction feel more natural.

In today’s keynote, Google DeepMind demonstrated the interactive capabilities of Project Astra.

Google developed the agent prototype on top of Gemini. It processes information faster by continuously encoding video frames, combining video and voice input into a timeline of events, and caching this information for efficient recall.

Using its speech models, Google also enhanced how the agent sounds, giving it a wider range of intonation. These agents can better understand the context in which they are used and respond quickly in conversation.
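Google has not published how the prototype is implemented, so the following is only a toy sketch of the general idea described above: events from the video and voice streams are merged into one time-ordered timeline, and a bounded cache keeps the most recent ones available for quick recall. Every name here (`Event`, `EventTimeline`, `recall`) is hypothetical, and the string payloads stand in for encoded frames and speech segments.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    timestamp: float   # seconds since the session started
    modality: str      # "video" or "audio"
    payload: str       # stand-in for an encoded frame or speech segment

class EventTimeline:
    """Keeps the most recent events from all modalities in arrival order."""

    def __init__(self, max_events=1000):
        # A bounded deque acts as the cache: the oldest entries drop out first.
        self._events = deque(maxlen=max_events)

    def add(self, event):
        # Assumes events arrive in roughly chronological order.
        self._events.append(event)

    def recall(self, start, end):
        """Return cached events whose timestamps fall within [start, end]."""
        return [e for e in self._events if start <= e.timestamp <= end]

timeline = EventTimeline()
timeline.add(Event(0.0, "video", "frame: desk with a pair of glasses"))
timeline.add(Event(0.5, "audio", "speech: what does this code do?"))
timeline.add(Event(1.0, "video", "frame: whiteboard"))
timeline.add(Event(1.5, "audio", "speech: where did I leave my glasses?"))

# Look back over the first second to answer the later question.
for event in timeline.recall(0.0, 1.0):
    print(event.timestamp, event.modality, event.payload)
```

The bounded cache is the main design choice in this sketch: it trades long-term memory for a predictable memory footprint and fast lookups over the recent past.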

Just a brief comment here. In Machine Heart’s view, the interaction experience in the Project Astra demo falls short of GPT-4o’s real-time performance. Whether in response time, the emotional richness of the voice, or the ability to be interrupted, GPT-4o’s interaction seems more natural. What do you readers think?

Countering Sora: Video Generation Model Veo Released

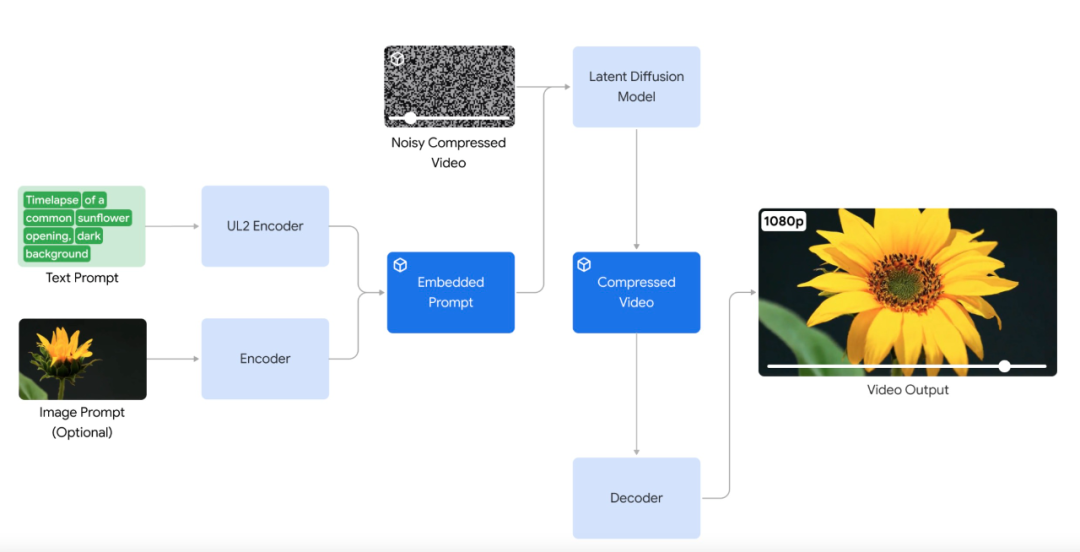

In terms of AI generated videos, Google announced the launch of video generation model Veo. Veo can generate high-quality 1080p resolution videos of various styles, with durations of over a minute.

With a deep understanding of natural language and visual semantics, the Veo model has made breakthroughs in understanding video content, rendering high-definition images, and simulating physical principles. The videos generated by Veo can accurately and finely express the user’s creative intentions.

Veo also supports using images and text together as a prompt to generate videos. By providing reference images and text prompts, the videos generated by Veo will follow the image style and user text instructions.

Interestingly, the demo that Google released is a “llama” video generated by Veo, which easily reminds people of Meta’s open source model series Llama.

When it comes to long videos, Veo can produce videos of 60 seconds or even longer. It can do this through a single prompt or by providing a series of prompts that together tell a story. This is key for the application of video generation models in film and television production.

Veo builds on Google’s earlier work in visual content generation, including Generative Query Network (GQN), DVD-GAN, Imagen-Video, Phenaki, WALT, VideoPoet, Lumiere, and others.

Starting today, Google is offering a preview version of Veo in VideoFX to select creators, and others can sign up for the waitlist. Google will also bring some of Veo’s capabilities to products like YouTube Shorts.

New Text-to-Image Model Imagen 3

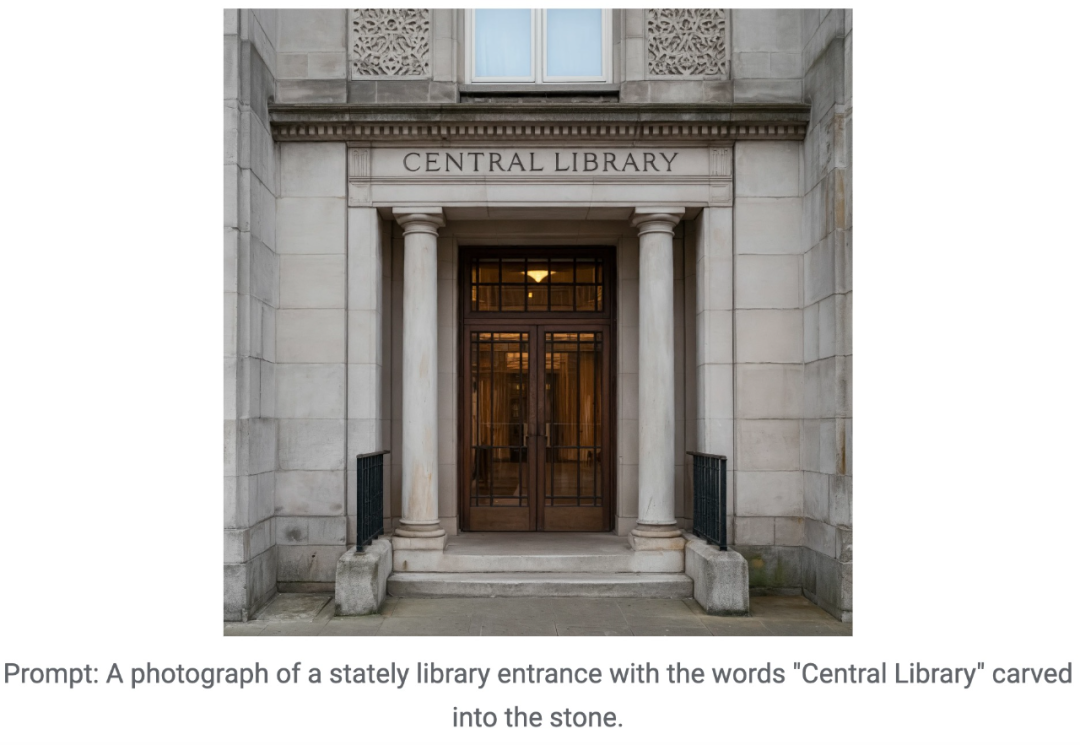

In the field of text-to-image generation, Google has again upgraded its series of models – releasing Imagen 3.

Imagen 3 has been improved in its rendering of detail and lighting and in reducing distracting artifacts, and its understanding of prompts is significantly better.

To help Imagen 3 capture details from longer prompts, such as specific camera angles or compositions, Google added richer detail to the caption of each image in the training data.

For example, by adding “slightly out of focus in the foreground,” or “warm light,” to the input prompts, Imagen 3 can generate images according to the requirements:

In addition, Google specifically improved the “text blur” issue in image generation, that is, optimizing image rendering to make the text in the generated image clear and stylized.

To improve usability, Imagen 3 will provide multiple versions, each optimized for different types of tasks.

Starting today, Google provides a preview version of Imagen 3 in ImageFX for some creators, and users can register to join the waitlist.

Sixth Generation TPU Chip Trillium

Generative AI is changing the way humans interact with technology, and it also provides tremendous efficiency opportunities for businesses. But these advances require more computing, memory, and communication capabilities to train and fine-tune the most powerful models.

For this reason, Google has launched the sixth-generation TPU Trillium, the most powerful and energy-efficient TPU to date, which will officially go online at the end of 2024.

Trillium is highly customized, AI-specific hardware. Many of the innovations announced at this Google I/O, including the new models Gemini 1.5 Flash, Imagen 3, and Gemma 2, are trained and served on TPUs.

Compared to TPU v5e, the peak computing performance per chip of the Trillium TPU has increased by 4.7 times. It also doubles the high bandwidth memory (HBM) and the inter-chip interconnect (ICI) bandwidth. In addition, Trillium is equipped with the third-generation SparseCore, specifically used to handle the ultra-large embeddings common in advanced ranking and recommendation workloads.

Google stated that Trillium can train the next generation of AI models faster while reducing latency and lowering costs. Google also describes Trillium as its most sustainable TPU so far, with energy efficiency improved by more than 67% compared to its predecessor.

Trillium can scale up to 256 TPUs in a single high-bandwidth, low-latency pod. Beyond this pod-level scalability, multislice technology and Titanium Intelligence Processing Units (IPUs) allow Trillium TPUs to scale to hundreds of pods, connecting tens of thousands of chips into a supercomputer interconnected by a multi-petabit-per-second data center network.
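The scale implied by these figures is easy to work out. The sketch below multiplies the 256-chip pod size by a few hypothetical pod counts and, using the 4.7x per-chip figure quoted earlier, expresses the aggregate peak compute in units of one TPU v5e chip. The pod counts are examples only, and the calculation assumes ideal linear scaling with no interconnect or utilization overhead, which real systems will not achieve.

```python
CHIPS_PER_POD = 256        # per-pod maximum quoted in the article
PEAK_COMPUTE_GAIN = 4.7    # per-chip peak compute relative to TPU v5e, per the article

def total_chips(pod_count, chips_per_pod=CHIPS_PER_POD):
    """Number of chips across a given number of fully populated pods."""
    return pod_count * chips_per_pod

def relative_peak_compute(pod_count):
    """Aggregate peak compute in 'TPU v5e chip' units, assuming ideal scaling."""
    return total_chips(pod_count) * PEAK_COMPUTE_GAIN

# Hypothetical cluster sizes, for illustration only.
for pods in (1, 10, 100):
    print(f"{pods:>3} pods -> {total_chips(pods):>6,} chips, "
          f"~{relative_peak_compute(pods):,.0f} v5e-chip equivalents at peak")
```

At 100 pods, for example, the total comes to 25,600 chips.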

Google began developing its first TPU, TPU v1, as early as 2013 and launched the first Cloud TPU in 2017. These TPUs have powered services such as real-time voice search, photo object recognition, and language translation, and even provide the technology behind products from autonomous driving companies like Nuro.

Trillium is also part of the Google AI Hypercomputer, a pioneering supercomputing architecture designed to handle cutting-edge AI workloads. Google is working with Hugging Face to optimize the hardware for open-source model training and serving.

That covers the key content from today’s Google I/O conference. Google has clearly entered into full-scale competition with OpenAI in large-model technology and products. The releases from OpenAI and Google over these two days also show that the competition has entered a new stage: multimodality and a more natural interactive experience have become the key to turning large-model technology into products that more people will accept.

Looking forward to 2024, the innovation of large model technology and products can bring us more surprises.