Led by its original author, LSTM really is making a comeback!

LSTM: In this new life, I am taking back everything the Transformer took from me.

In the 1990s, Long Short-Term Memory (LSTM) introduced two core ideas: the constant error carousel and gating. For more than three decades, LSTM has stood the test of time and contributed to numerous deep learning success stories. However, once the Transformer arrived with parallelizable self-attention at its core, LSTM’s inherent limitations pushed it out of the limelight.

Just when everyone thought that Transformer was firmly entrenched in the field of language models, LSTM made a comeback – this time, as xLSTM.

On May 8, Sepp Hochreiter, who originally proposed LSTM, uploaded a preprint of the xLSTM paper to arXiv.

The paper also lists a company called “NXAI” among its authors' affiliations. Sepp Hochreiter commented: “With xLSTM, we have narrowed the gap with the existing state-of-the-art LLM. With NXAI, we have started to build our own European LLM.”

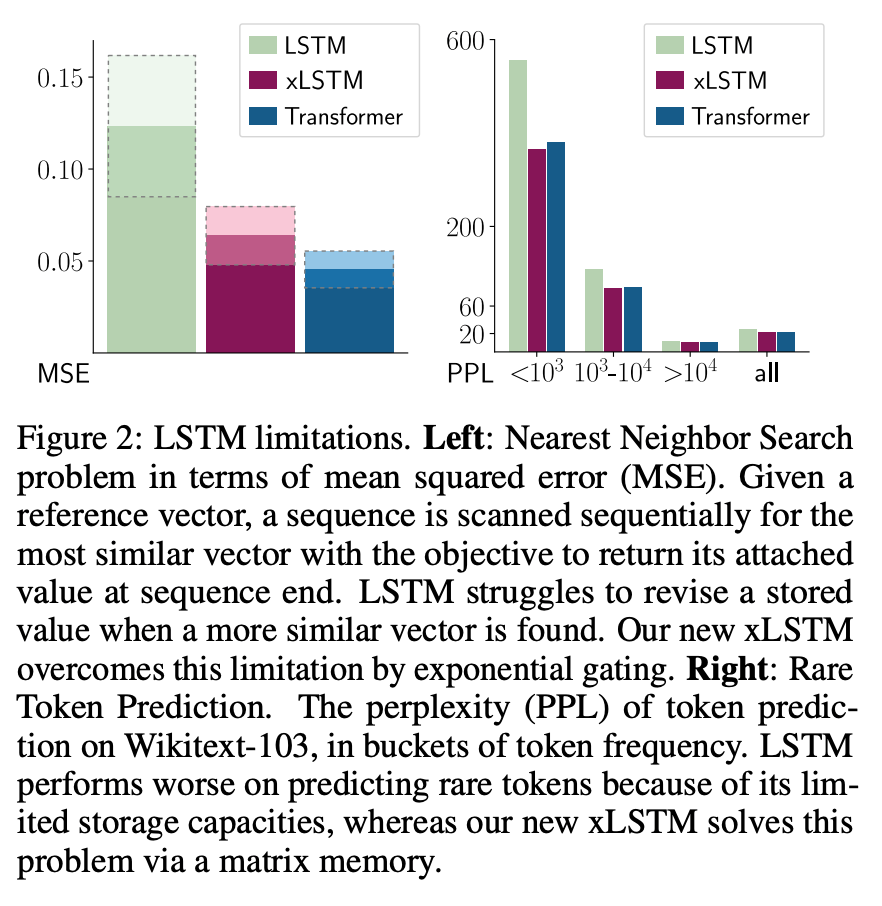

- Paper Title: xLSTM: Extended Long Short-Term Memory

- Paper Link: https://arxiv.org/pdf/2405.04517

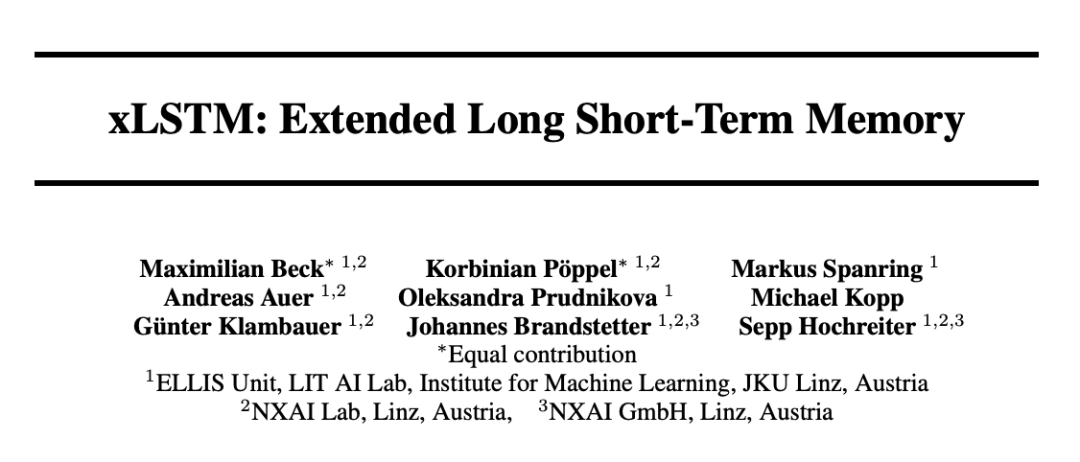

Specifically, xLSTM addresses three limitations of the traditional LSTM:

(i) Inability to modify storage decisions.

This limitation can be illustrated by the “Nearest Neighbor Search” problem: given a reference vector, the sequence must be scanned in order for the most similar vector, so that the value attached to it can be output at the end of the sequence. The left side of Figure 2 shows the mean squared error on this task. When a more similar vector turns up later in the sequence, LSTM struggles to revise the value it has already stored, whereas the new xLSTM overcomes this limitation through exponential gating.

(ii) Limited storage capacity, i.e., information must be compressed into scalar cell states.

The right side of Figure 2 shows the token prediction perplexity for different token frequencies on Wikitext103. Due to LSTM’s limited storage capacity, it performs poorly on infrequent tokens. xLSTM addresses this issue through matrix memory.

(iii) Lack of parallelizability due to memory mixing, i.e., the hidden-to-hidden connections between the hidden states of one time step and the next, which force sequential processing.
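Limitation (i) can be made concrete with a toy version of the nearest-neighbor task. The sketch below is illustrative only: the function name `scan_for_nearest`, the use of squared Euclidean distance as the similarity measure, and the sample data are assumptions, not details from the paper. It shows why a model solving this task has to be able to overwrite what it stored earlier.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def scan_for_nearest(reference, keys, values):
    """Scan (key, value) pairs in order and keep the value of the closest key.

    Returns the stored value and how often the storage decision was revised.
    """
    best_dist, stored_value, revisions = float("inf"), None, 0
    for key, value in zip(keys, values):
        dist = squared_distance(reference, key)
        if dist < best_dist:
            # A closer key appeared: the previously stored value must be replaced.
            best_dist, stored_value = dist, value
            revisions += 1
    return stored_value, revisions


# Hypothetical data: the closest key only arrives at the very end.
reference = [0.0, 0.0]
keys = [[3.0, 0.0], [1.0, 0.0], [2.0, 0.0], [0.5, 0.0]]
values = [10, 20, 30, 40]
print(scan_for_nearest(reference, keys, values))
```

Every time a closer key appears, the stored value has to be replaced, which is exactly the kind of revision the article says a classic LSTM finds difficult.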

At the same time, Sepp Hochreiter and his team set out to answer a key question in this new paper: what kind of performance can be achieved when these limitations are overcome and LSTM is scaled to the size of current large language models?

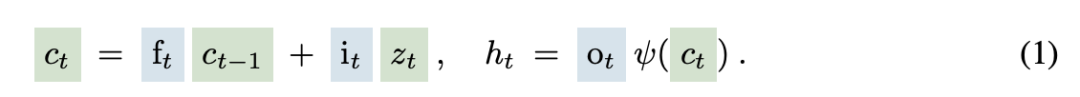

To overcome the limitations of LSTM, xLSTM made two major modifications to the LSTM concept in equation (1).

In the original LSTM, the constant error carousel is the additive update of the cell state c_(t-1) (green) by the cell input z_t, moderated by sigmoid gates (blue). The input gate i_t and the forget gate f_t control this update, while the output gate o_t controls the output of the memory cell, i.e., the hidden state h_t. The cell state is normalized or squashed by ψ, and output gating then yields the hidden state.
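As a rough sketch of that update, the scalar recurrence can be written as c_t = f_t · c_(t-1) + i_t · z_t and h_t = o_t · ψ(c_t). The code below assumes ψ = tanh and a tanh cell input, which are common choices, and it takes the gate pre-activations as plain arguments; in a full LSTM they would be computed from the current input and the previous hidden state. The function name `lstm_step` is hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(c_prev, z_pre, i_pre, f_pre, o_pre):
    """One scalar LSTM memory-cell update from pre-activations (illustrative)."""
    z_t = math.tanh(z_pre)           # cell input
    i_t = sigmoid(i_pre)             # input gate in (0, 1)
    f_t = sigmoid(f_pre)             # forget gate in (0, 1)
    o_t = sigmoid(o_pre)             # output gate in (0, 1)
    c_t = f_t * c_prev + i_t * z_t   # constant error carousel: additive update
    h_t = o_t * math.tanh(c_t)       # psi assumed to be tanh, then output gating
    return c_t, h_t


# With the forget gate open and the input gate closed, the cell state is carried over.
c_t, h_t = lstm_step(c_prev=0.7, z_pre=1.0, i_pre=-50.0, f_pre=50.0, o_pre=0.0)
print(c_t, h_t)
```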

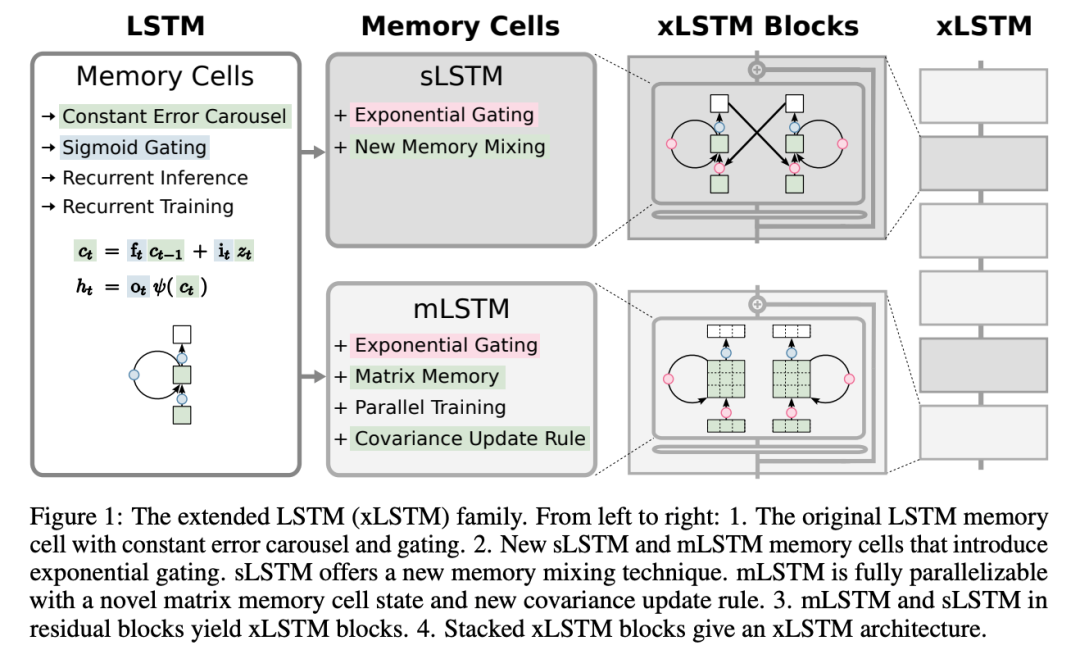

The modifications in xLSTM are exponential gating and novel memory structures, which yield two new members of the LSTM family:

(i) sLSTM (Section 2.2), with scalar memory, scalar update, and memory mixing features;

(ii) mLSTM (Section 2.3), with matrix memory and a covariance (outer product) update rule, which is fully parallelizable.
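To give a feel for exponential gating in the scalar (sLSTM-style) case, here is a minimal sketch. It rests on assumptions that go beyond this article: the input and forget gates are both taken as exponentials of their pre-activations, a normalizer state n accumulates the gate mass, and a stabilizer state m keeps the exponentials in a safe numeric range. This follows my reading of the paper's formulation and should be checked against it; the function name `slstm_step` and the zero initial state are hypothetical.

```python
import math


def slstm_step(state, z_pre, i_pre, f_pre, o_pre):
    """One scalar update with exponential input/forget gates (illustrative).

    state = (c, n, m): cell state, normalizer state, stabilizer state.
    """
    c_prev, n_prev, m_prev = state
    z_t = math.tanh(z_pre)
    o_t = 1.0 / (1.0 + math.exp(-o_pre))
    # Work in log space: log i_t = i_pre and log f_t = f_pre (assumed exp gates).
    m_t = max(f_pre + m_prev, i_pre)       # stabilizer: running max of the log gates
    i_t = math.exp(i_pre - m_t)            # stabilized input gate, at most 1
    f_t = math.exp(f_pre + m_prev - m_t)   # stabilized forget gate, at most 1
    c_t = f_t * c_prev + i_t * z_t
    n_t = f_t * n_prev + i_t               # normalizer tracks accumulated gate mass
    h_t = o_t * (c_t / n_t)
    return (c_t, n_t, m_t), h_t


state = (0.0, 0.0, 0.0)
state, h1 = slstm_step(state, z_pre=1.0, i_pre=0.0, f_pre=0.0, o_pre=0.0)
# A later input with a much larger input-gate pre-activation dominates the memory.
state, h2 = slstm_step(state, z_pre=-1.0, i_pre=10.0, f_pre=0.0, o_pre=0.0)
print(h1, h2)
```

In this toy run the second input almost completely replaces the first, which hints at how an unbounded input gate can revise an earlier storage decision.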

Both sLSTM and mLSTM enhance LSTM through exponential gating. To enable parallel processing, mLSTM abandons memory mixing, i.e., the hidden-to-hidden recurrent connections. Both mLSTM and sLSTM can be extended to multiple memory cells, with sLSTM featuring memory mixing across cells. Moreover, sLSTM can have multiple heads, with no memory mixing across heads, only between the cells within each head. By introducing sLSTM heads together with exponential gating, the researchers establish a new form of memory mixing. For mLSTM, multiple heads and multiple cells are equivalent.
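The matrix memory with an outer-product update can be sketched in a few lines. The version below is simplified and partly assumed: the gate values are passed in directly rather than computed, keys are scaled by 1/√d, a normalizer vector is kept alongside the memory, and the stabilization of the exponential gates is left out. The function name `mlstm_step` and the query/key/value naming are taken from my reading of the paper and should be verified there. Because nothing in the update depends on the previous hidden state, the steps do not have to wait on each other's outputs.

```python
import math


def mlstm_step(C_prev, n_prev, q, k, v, i_t, f_t, o_t):
    """One matrix-memory update and retrieval (illustrative).

    C_prev: d x d memory (list of rows), n_prev: length-d normalizer,
    q, k, v: query, key, value vectors, i_t and f_t: scalar gates, o_t: vector gate.
    """
    d = len(k)
    k = [x / math.sqrt(d) for x in k]  # assumed key scaling
    # Covariance (outer product) update: C_t = f_t * C_prev + i_t * v k^T
    C_t = [[f_t * C_prev[r][c] + i_t * v[r] * k[c] for c in range(d)]
           for r in range(d)]
    n_t = [f_t * n_prev[c] + i_t * k[c] for c in range(d)]
    # Retrieval: C_t q, divided by a lower-bounded normalizer.
    numerator = [sum(C_t[r][c] * q[c] for c in range(d)) for r in range(d)]
    denominator = max(abs(sum(n * x for n, x in zip(n_t, q))), 1.0)
    h_t = [o * x / denominator for o, x in zip(o_t, numerator)]
    return C_t, n_t, h_t


d = 2
C0 = [[0.0] * d for _ in range(d)]
n0 = [0.0] * d
# Store the value [3, 4] under the key [1, 0], then query in the same direction.
C1, n1, h1 = mlstm_step(C0, n0, q=[math.sqrt(2.0), 0.0], k=[1.0, 0.0],
                        v=[3.0, 4.0], i_t=1.0, f_t=1.0, o_t=[1.0, 1.0])
print(h1)
```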

Integrating these new LSTM variants into residual block modules yields xLSTM blocks, and stacking these blocks residually yields the xLSTM architecture. The architecture and its components are shown in Figure 1.

An xLSTM block should non-linearly summarize the past in a high-dimensional space in order to better separate different histories or contexts. Separating histories is a prerequisite for correctly predicting the next sequence element (such as the next token). Here the researchers appeal to Cover's theorem, which states that non-linearly embedded patterns are more likely to be linearly separable in a high-dimensional space than in the original space.
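A classic toy illustration of the idea behind Cover's theorem is XOR: the four points cannot be separated by a line in two dimensions, but they become linearly separable after a non-linear embedding into three dimensions. The embedding and weights below are hand-picked for illustration and are not from the paper.

```python
def embed(x1, x2):
    """Non-linear embedding from 2-D to 3-D by adding the product feature."""
    return (x1, x2, x1 * x2)


def linear_score(features, weights, bias):
    """Score of a plain linear classifier on the given features."""
    return sum(w * f for w, f in zip(weights, features)) + bias


xor_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
weights, bias = (1.0, 1.0, -2.0), -0.5  # hand-picked separating hyperplane

predictions = [int(linear_score(embed(*x), weights, bias) > 0) for x, _ in xor_data]
print(predictions)  # [0, 1, 1, 0]
```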

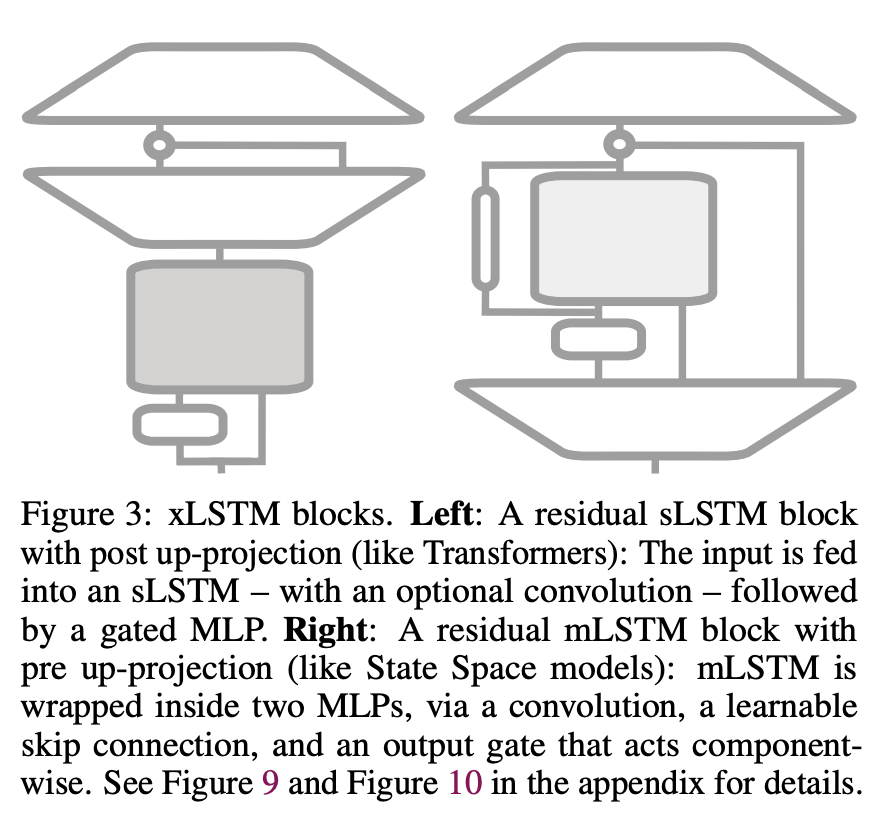

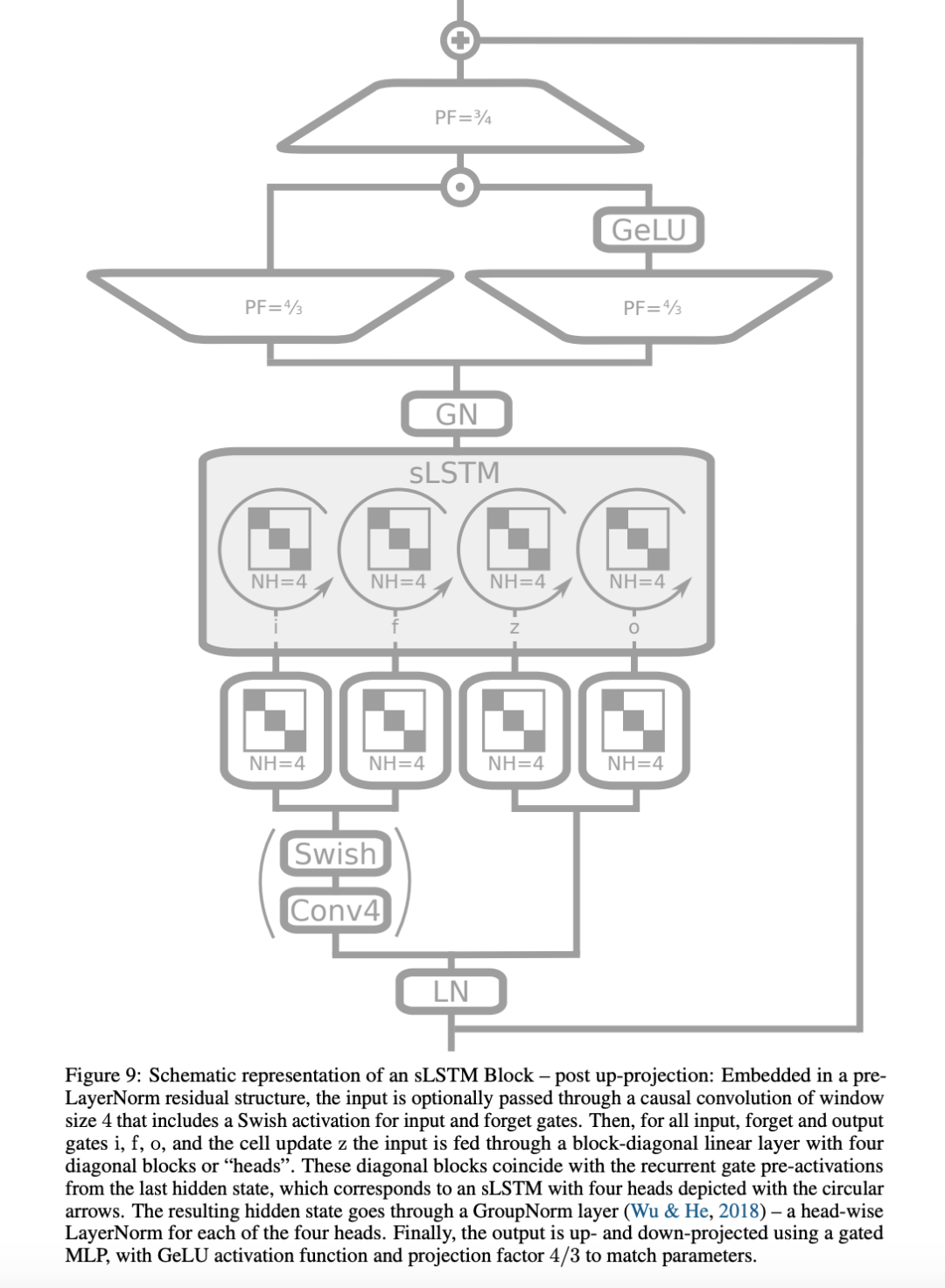

They considered two residual block structures:

(i) Post up-projection residual blocks (like Transformers), which non-linearly summarize the past in the original space, then linearly map it to a high-dimensional space, apply a non-linear activation function, and linearly map it back to the original space (left side of Figure 3 and third column of Figure 1; a more detailed version is shown in Figure 9).

(ii) Pre up-projection residual blocks (like state space models), which linearly map to a high-dimensional space, non-linearly summarize the past in that space, and then linearly map back to the original space.

For xLSTM blocks with sLSTM, the researchers primarily use post up-projection blocks. For xLSTM blocks with mLSTM, they use pre up-projection blocks, because memory capacity grows in the high-dimensional space.
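The difference between the two block structures is mainly the order of operations, which the following sketch tries to make visible. It is heavily simplified and rests on assumptions: `summarize` is a hypothetical placeholder for the sLSTM or mLSTM layer and here acts on a single vector rather than a sequence, GELU is an assumed choice of activation, and normalization layers and any gating or convolutions in the actual blocks are omitted.

```python
import math


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def post_up_projection_block(x, summarize, W_up, W_down):
    """Summarize in the original space, then apply an up/down-projected MLP."""
    x = add(x, summarize(x))                      # residual around the summarizer
    hidden = [gelu(u) for u in matvec(W_up, x)]   # up-projection plus non-linearity
    return add(x, matvec(W_down, hidden))         # down-projection, second residual


def pre_up_projection_block(x, summarize, W_up, W_down):
    """Up-project first, summarize in the high-dimensional space, then down-project."""
    high = matvec(W_up, x)
    return add(x, matvec(W_down, summarize(high)))


# Hypothetical 2 -> 4 -> 2 projections and an identity stand-in for the summarizer.
W_up = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
W_down = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
x = [1.0, 2.0]
print(post_up_projection_block(x, lambda v: v, W_up, W_down))
print(pre_up_projection_block(x, lambda v: v, W_up, W_down))
```

In the pre up-projection variant the summarizer sees the wider vector, which matches the article's point that mLSTM gains memory capacity in the high-dimensional space.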

Experiments

Next, the researchers carried out experimental evaluations on xLSTM, comparing it with existing language modeling methods.

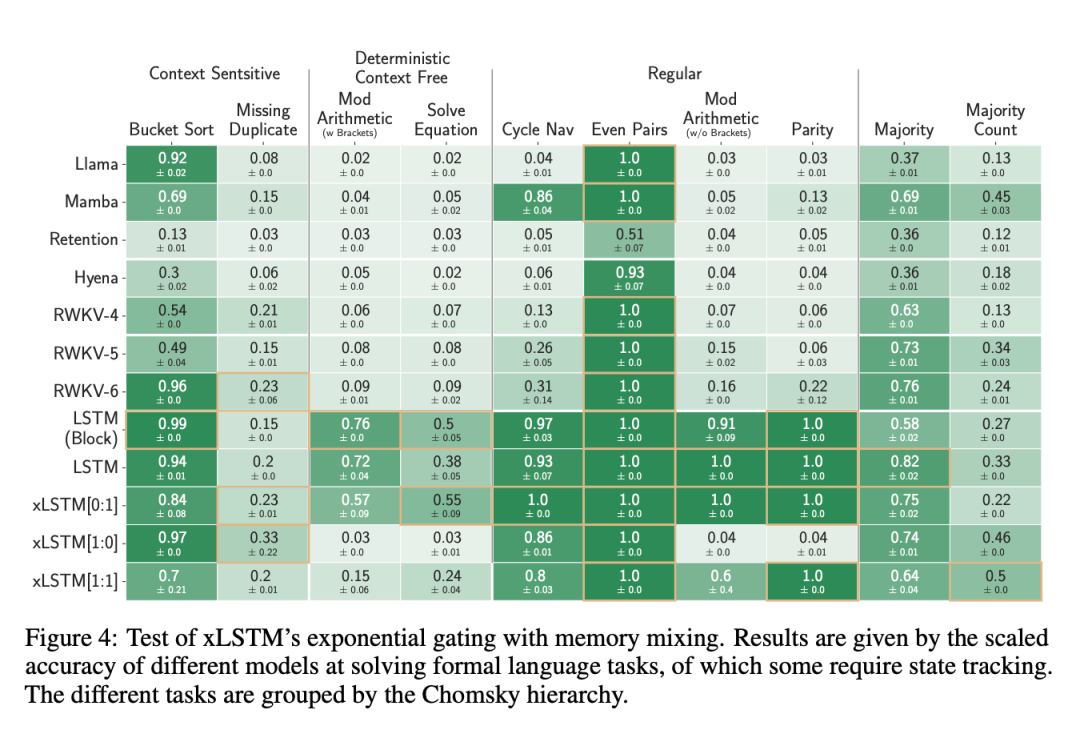

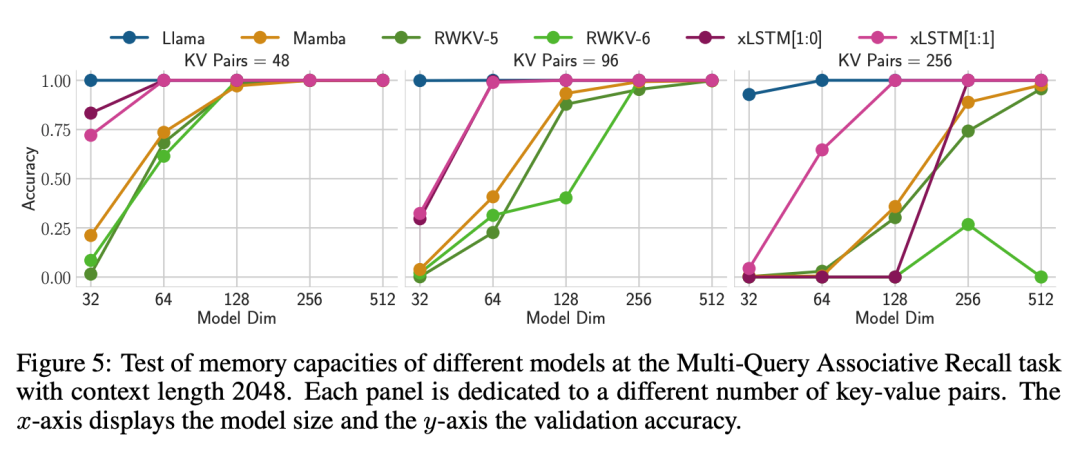

Section 4.1 examines specific capabilities of xLSTM on synthetic tasks. First, the researchers tested the effectiveness of xLSTM’s new exponential gating and memory mixing on formal language tasks. Then, they evaluated the effectiveness of xLSTM’s new matrix memory on the Multi-Query Associative Recall (MQAR) task. Lastly, they assessed how xLSTM handles long sequences on the Long Range Arena (LRA).

Section 4.2 compares the validation set perplexities of various current language modeling methods trained on the same dataset, including ablation studies on xLSTM, followed by an evaluation of the scaling behavior of the different methods.
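For readers less familiar with the metric used in these comparisons, perplexity is the exponential of the average negative log-likelihood that a model assigns to the correct next tokens, so lower is better. The helper below is a generic sketch with made-up probabilities, not the paper's evaluation code.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp(mean negative log-likelihood of the target tokens)."""
    nll = [-math.log(p) for p in token_probs]
    return math.exp(sum(nll) / len(nll))


# A model that spreads probability evenly over 4 candidates has perplexity 4.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
# Assigning higher probability to the correct tokens lowers the perplexity.
print(perplexity([0.9, 0.8, 0.95, 0.7]))
```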

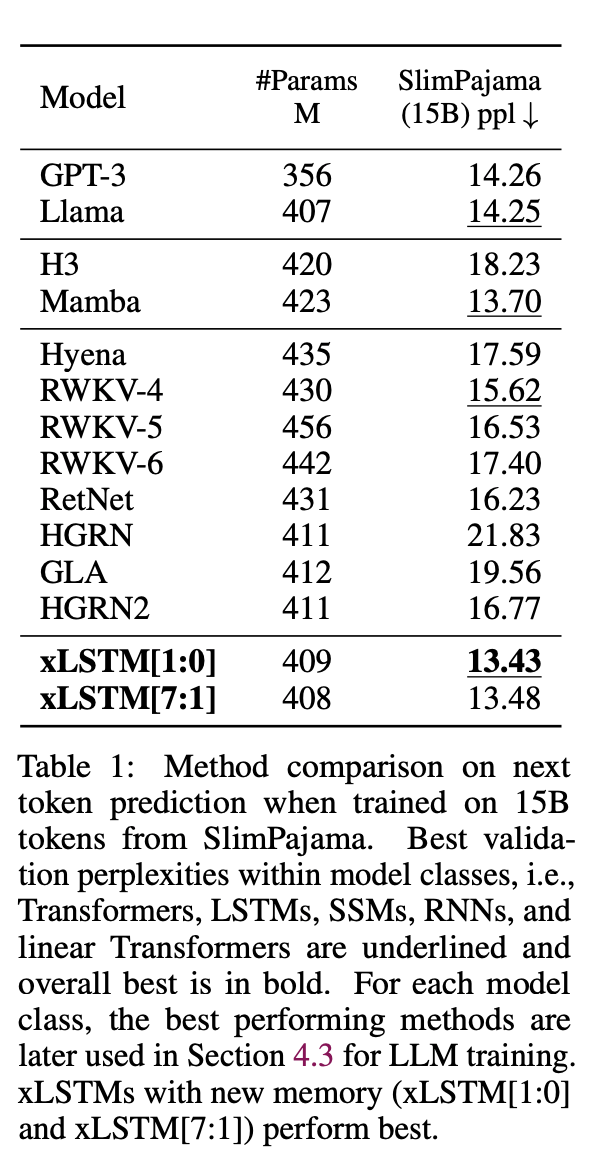

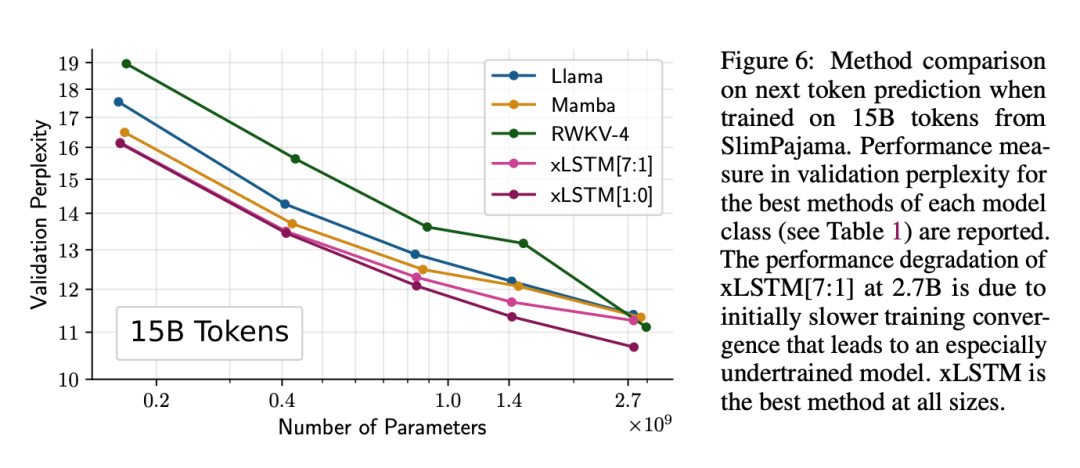

The researchers trained xLSTM, Transformers, State Space Models (SSMs), and other models in an autoregressive language modeling setting on 15B tokens of SlimPajama. The results in Table 1 show that xLSTM outperforms all the existing methods compared in terms of validation perplexity.

Figure 6 shows the scaling results of the experiment, indicating that xLSTM also performs well for larger scale models.

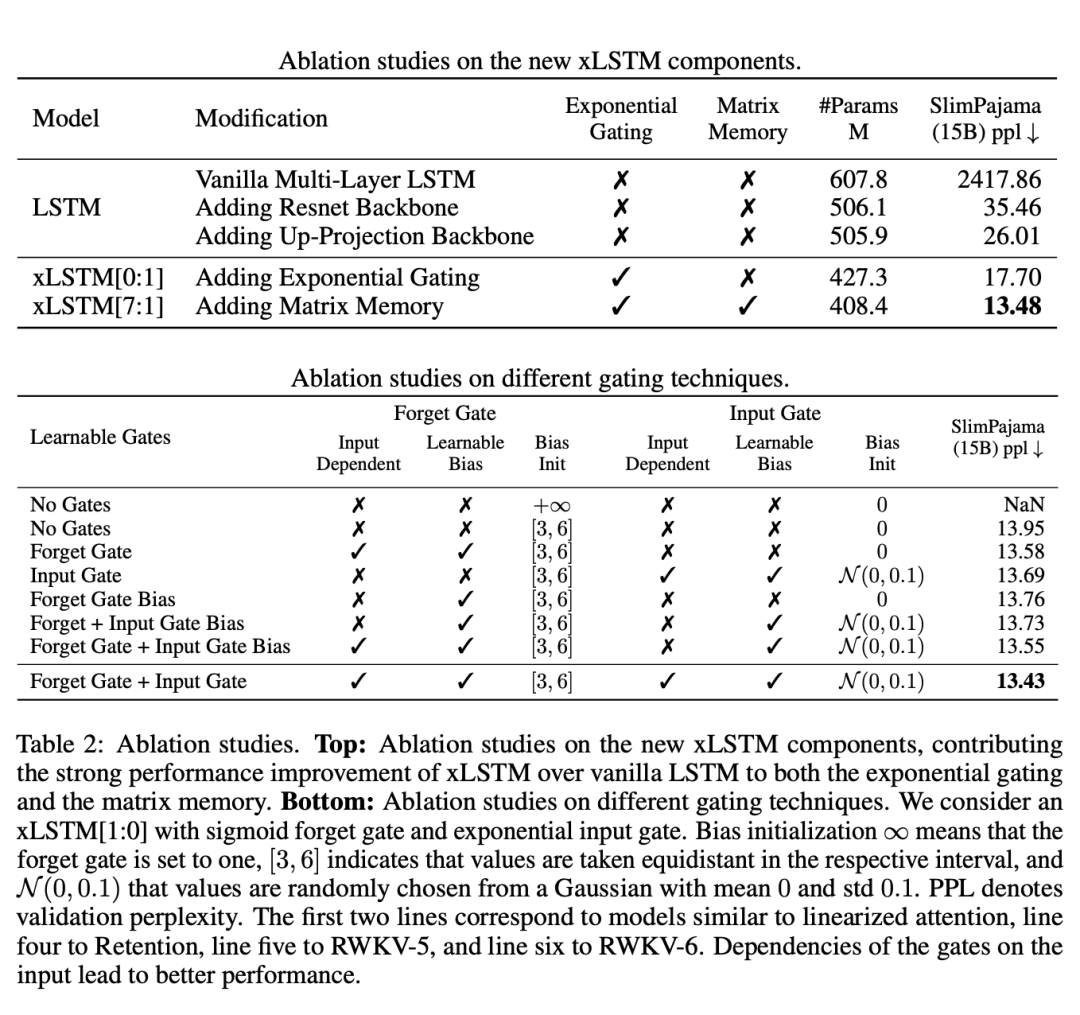

The ablation study shows that the performance improvement stems from the exponential gating and matrix memory.

Section 4.3 presents more in-depth language modeling experiments.

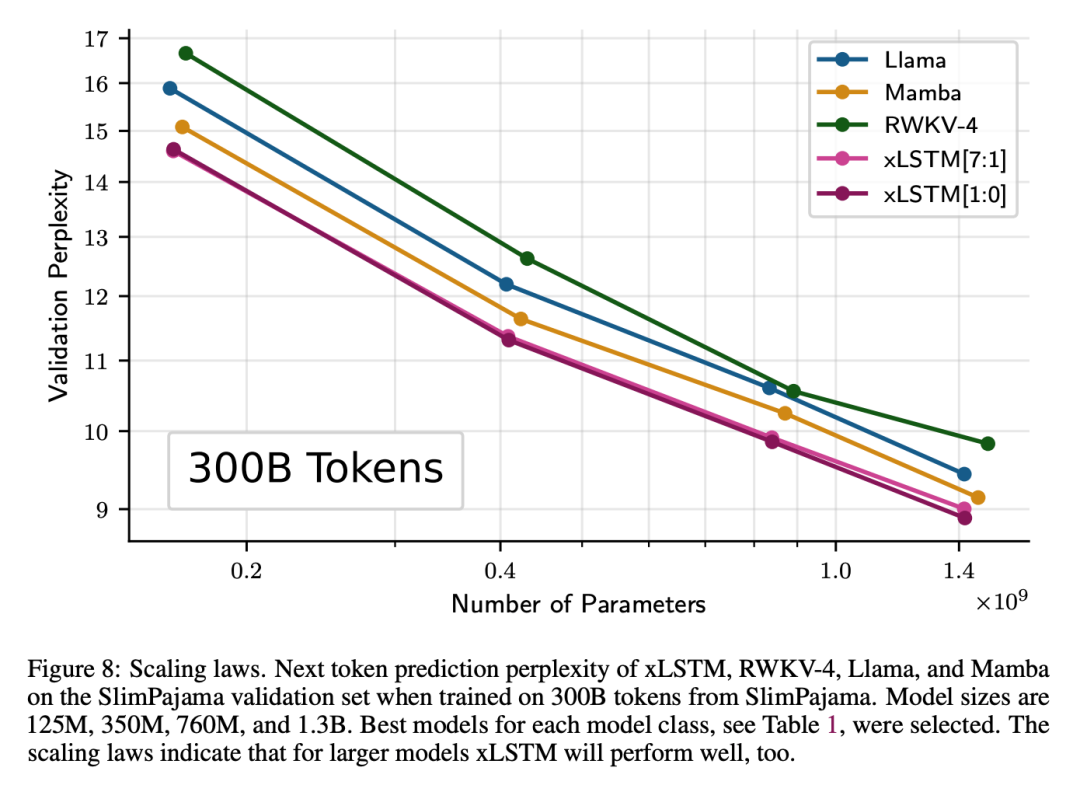

The researchers increased the amount of training data to 300B tokens from SlimPajama and compared xLSTM, RWKV-4, Llama, and Mamba. They trained models of different sizes (125M, 350M, 760M, and 1.3B) and evaluated them in depth. First, they assessed how well the methods extrapolate to longer contexts. Second, they tested the methods on validation perplexity and downstream task performance. Third, they evaluated the methods on 571 text domains of the PALOMA language benchmark dataset. Finally, they examined the scaling behavior of the different methods again, this time with 20 times more training data.

According to these results, xLSTM compares favorably with the other methods in both performance and scaling behavior.

For more research details, please refer to the original paper.