Apple launches AI cloud server plan, using M2 Ultra chip directly.

While other tech companies scramble for H100s and B200s, Apple is using the M2 as its server AI chip.

Although Apple has kept a lower profile in generative AI than competitors such as Google, Meta, and Microsoft, it has been conducting related research all along, and its approach to building a new ecosystem has consistently set it apart.

On the evening of May 7, at a special spring product launch event, Apple answered that public attention by skipping past the already powerful M3 chip and going straight to the next generation, the M4.

When Apple announced the new M4 chip, it emphasized its advanced AI performance, calling its newly designed 16-core Neural Engine “the most powerful in Apple’s history”.

The excitement had not yet faded, with many saying that Apple was turning its late start into an advantage and finally seizing its chance to rise. While other manufacturers work on next-generation chips for AI tasks, Apple has taken a different path and turned its attention to chips for cloud services.

Apple is putting its desktop-class M2 Ultra chip straight into cloud servers.

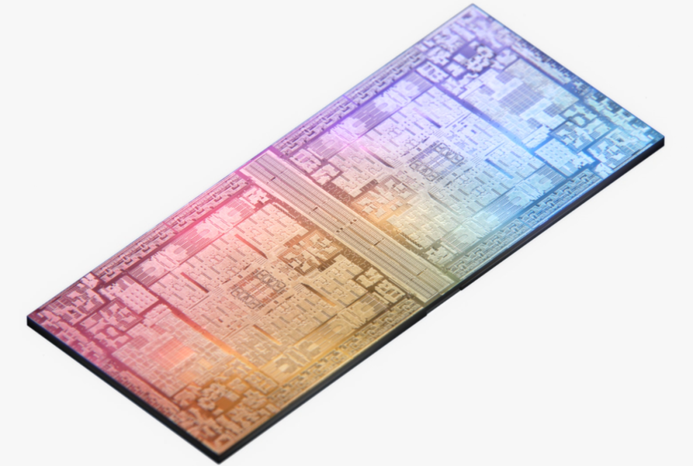

Apple’s UltraFusion packaging architecture uses a silicon interposer to connect two M2 Max chips, creating the M2 Ultra. Its 32-core Neural Engine delivers 31.6 trillion operations per second, 40% faster than that of the M1 Ultra.
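The two Neural Engine figures above imply a baseline for the M1 Ultra. As a rough sanity check, the short sketch below works backward from the quoted numbers; the function name `implied_baseline` is purely illustrative, and the result is only what the article's own figures imply, not an official specification.

```python
# Figures quoted in the article for the M2 Ultra's Neural Engine.
M2_ULTRA_TOPS = 31.6           # trillion operations per second
GAIN_OVER_M1_ULTRA = 0.40      # "40% faster"


def implied_baseline(new_value: float, relative_gain: float) -> float:
    """Return the baseline b such that new_value == b * (1 + relative_gain)."""
    return new_value / (1.0 + relative_gain)


m1_ultra_tops = implied_baseline(M2_ULTRA_TOPS, GAIN_OVER_M1_ULTRA)
print(f"Implied M1 Ultra throughput: {m1_ultra_tops:.1f} TOPS")
```

By these numbers, the M1 Ultra's Neural Engine would land at roughly 22.6 trillion operations per second.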

According to reports from Bloomberg and other media, Apple will roll out some AI features this year through data centers equipped with its own processors, part of a large-scale push to infuse its devices with AI capabilities.

Informed sources revealed that Apple is placing high-end chips similar to those designed for Macs in cloud computing servers, which are meant to handle the most advanced AI tasks coming to Apple devices.

The sources, who asked not to be named because the plan is still confidential, said simpler AI-related functions will be processed directly on iPhones, iPads, and Macs.

This move is part of Apple’s accelerated push into generative AI. Since the launch of ChatGPT, global tech companies have been locked in a generative AI arms race. According to earlier reports, Apple is expected to unveil its ambitious AI strategy at its Worldwide Developers Conference on June 10.

Apple began planning about three years ago to use its own chips to process AI tasks in the cloud, but it accelerated that timeline after the AI craze driven by OpenAI’s ChatGPT and Google’s Gemini.

Although Apple is already looking ahead to future versions based on the M4 chip, its first self-developed AI server chips will be the M2 Ultra, launched last year in the Mac Pro and Mac Studio computers.

For investors hoping that Apple can buck the trend in AI, this move seems to offer a first glimmer of hope.

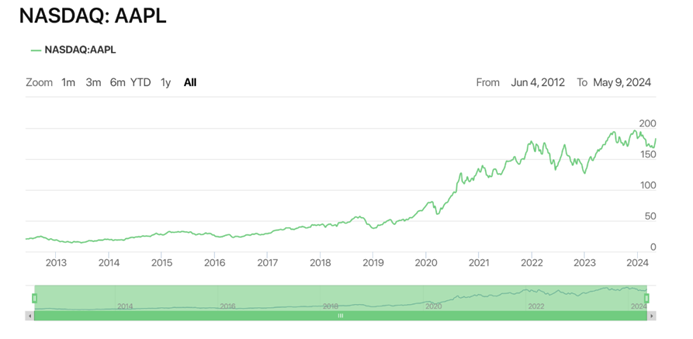

After Bloomberg reported the details, Apple’s stock price climbed to a session high of 184.59 US dollars in New York trading, although the stock is still down more than 4% for the year. Apple representatives declined to comment on the news of the M2 Ultra going to the cloud.

Apple is showing momentum in the NASDAQ market.

Beyond settling on the chips that will run tasks in the cloud, Apple is also routing AI work down one of two paths depending on the complexity of the task.

Relatively simple AI tasks, such as giving users summaries of missed iPhone notifications or incoming text messages, can be handled by the chips within Apple devices.

More complex tasks, such as generating images, summarizing long news articles, or drafting long email replies, may require cloud processing, as may the upgraded version of Apple’s Siri voice assistant.
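The split described above can be pictured as a simple dispatch rule. The sketch below is a hypothetical illustration only: the `AITask` type, the `is_complex` flag, and the `route` function are invented for this example, and nothing here reflects how Apple actually decides where a task runs. The task list uses the examples reported in the article.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on-device"
    CLOUD = "cloud"


@dataclass(frozen=True)
class AITask:
    name: str
    is_complex: bool  # hypothetical flag; the real criteria are not public


def route(task: AITask) -> Target:
    """Send complex tasks to the cloud and keep simple ones on the device."""
    return Target.CLOUD if task.is_complex else Target.ON_DEVICE


# Example tasks taken from the article's description.
TASKS = [
    AITask("summarize missed notifications", is_complex=False),
    AITask("summarize incoming text messages", is_complex=False),
    AITask("generate an image", is_complex=True),
    AITask("summarize a long news article", is_complex=True),
    AITask("draft a long email reply", is_complex=True),
]

for task in TASKS:
    print(f"{task.name}: {route(task).value}")
```

In practice the decision would likely depend on more than a single flag, for instance model size, device chip, or connectivity, but those details have not been reported.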

This seems to align with the ‘edge-cloud combination’ approach to large models that other smartphone manufacturers have described. The move, which will be part of the launch of Apple’s iOS 18 in the autumn, represents a shift for the company. For many years, Apple has prioritized on-device processing and promoted it as a ‘better way to ensure security and privacy’.

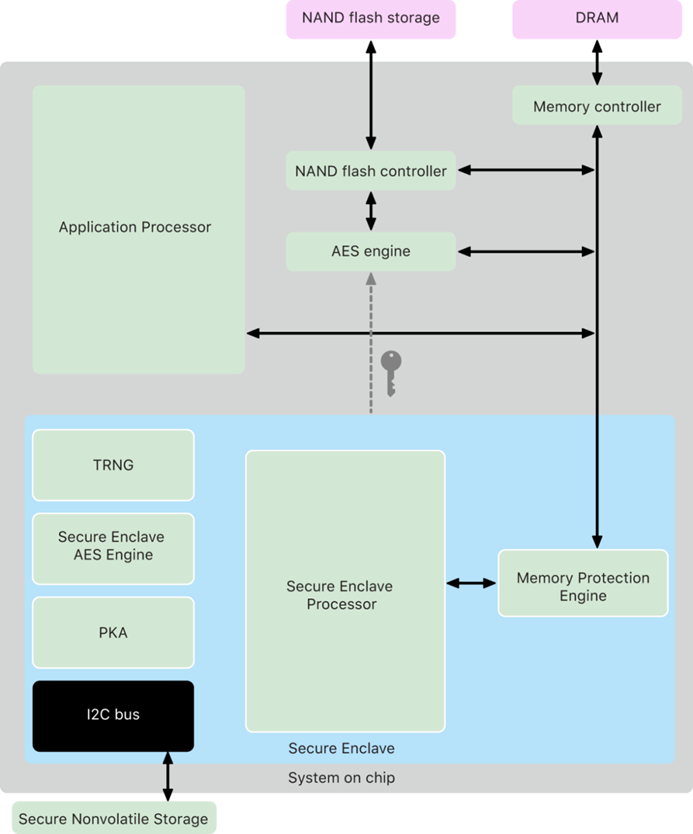

But people involved in creating the Apple server project (codenamed ACDC) say that components inside its processors can already protect user privacy. The company uses a feature called ‘Secure Enclave’, which isolates data so that it stays protected in the event of a security breach.

Secure Enclave is a dedicated security subsystem built into recent versions of iPhone, iPad, Mac, Apple TV, Apple Watch, and HomePod.

For now, Apple plans to run its cloud functions in its own data centers, but it will eventually rely on external facilities as well, just as it does with iCloud and other services. The Wall Street Journal also reported earlier on certain aspects of this server plan.

Apple’s CFO Luca Maestri hinted at this approach during an earnings call last week, saying that the company has its own data center capacity and supplements it with third-party capacity.

When asked about the company’s AI infrastructure, he said, “This is a model that has historically worked for us, and we plan to continue down the same path in the future.”

Device-side AI computational power will still be a crucial part of Apple’s AI strategy.

However, some of these functions will require Apple’s latest chips, such as the A17 Pro introduced in last year’s iPhone and the M4 that debuted in the iPad Pro earlier this week. These processors include significant upgrades to the Neural Engine, the part of the chip that handles AI tasks.

To meet the demands of generative AI, Apple is rapidly upgrading its product line with more powerful chips.

First, the new-generation M4 processor will soon come to the Mac lineup.

The brand-new M4 chip contains 28 billion transistors, is built on second-generation 3nm technology, and brings a series of enhancements to the CPU, GPU, and NPU.

According to a Bloomberg report in April, the Mac mini, iMac, and MacBook Pro will receive the M4 chip later this year, while the MacBook Air, Mac Studio, and Mac Pro will get it next year.

Together, these plans lay the groundwork for Apple to integrate AI into most of its product lines. The company will focus on features that make users’ everyday lives easier, such as suggestions and personalized experiences.

Although Apple does not plan to launch its own ChatGPT-style services, it has been discussing the possibility of offering this option through partnerships.

Just last week, Apple said that running AI on its devices will help it stand out among competitors.

Apple’s CEO Tim Cook said on an earnings call: “We believe in the transformative power and promise of AI, and we believe we have advantages that can make us unique in this new era, including the unique combination of Apple’s seamlessly integrated hardware, software, and services.”

Cook stated that Apple’s self-developed chips will give it an advantage in this still nascent field, adding that the company’s focus on privacy “supports everything we create.”

According to insiders, the company has invested hundreds of millions of dollars in its cloud plans over the past three years. Even so, gaps will remain in what it can offer.

For users who want a chatbot such as ChatGPT, Apple has held discussions with Google and OpenAI about integrating their chatbots into the iPhone and iPad.

Apple’s negotiations with OpenAI have recently reached an advanced stage, suggesting that a partnership is likely to materialize. A person familiar with the discussions said that Apple could ultimately offer a range of options from external companies.